The statistics are stark and sobering: online child sexual abuse has reached crisis levels, with reports growing exponentially in recent years. As technology evolves and becomes more integrated into children’s daily lives, so too do the risks they face online. But alongside these challenges, innovation and collaboration offer new hope in the fight to protect our kids.

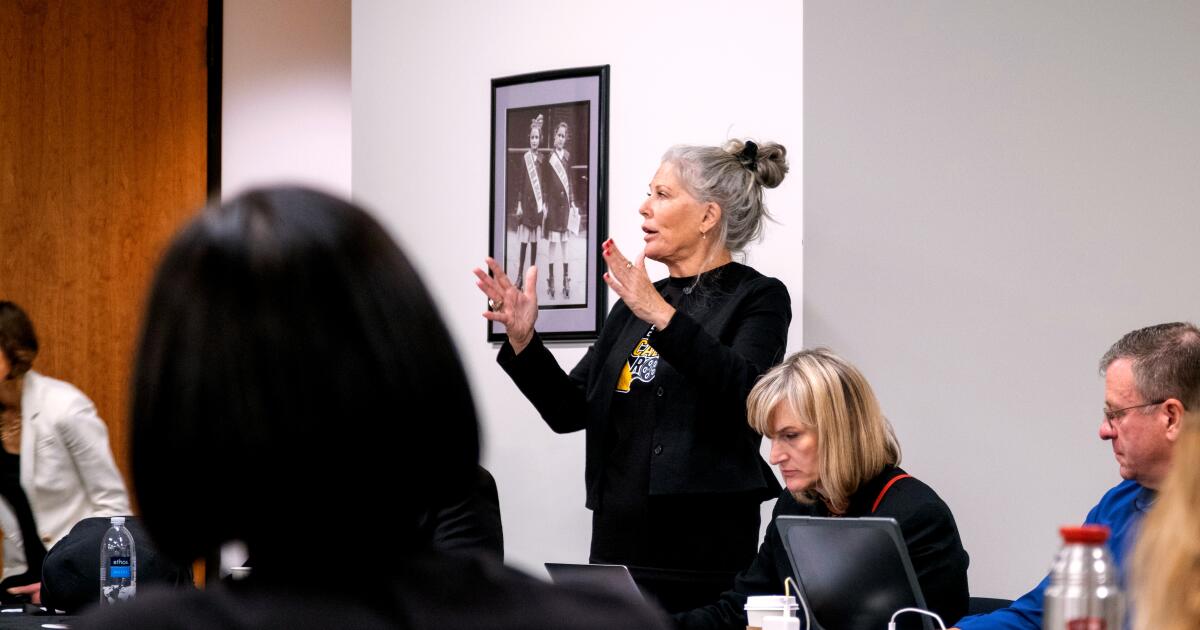

In a compelling episode of Purpose 360 with Carol Cone, Thorn CEO Julie Cordua delves into this critical issue, sharing insights from the frontlines of child protection. The conversation explores how Thorn is leveraging cutting-edge technology and research to defend children from online exploitation, while highlighting the roles that companies, policymakers, and caregivers must play in creating a safer digital world.

This timely discussion couldn’t be more relevant as we navigate unprecedented challenges in child safety online. From emerging AI threats to the rise of financial sextortion, understanding these issues, and the solutions being developed to address them, is essential for anyone concerned about children’s wellbeing in the digital age.

Transcript

Carol Cone:

I’m Carol Cone and welcome to Purpose 360, the podcast that unlocks the power of purpose to ignite business and social impact. In today’s Purpose 360 conversation, we’re going to address a massive public health crisis that I believe very few of us are aware of, and that’s child sexual abuse online. We’re going to be talking with an amazing not-for-profit, Thorn. They’re an innovative technology not-for-profit building products and programs that combat child sexual abuse at scale.

Let me give you a sense of the scale. In 2012, about 450,000 files of child sexual abuse videos, images, and conversations were online in the US alone. Fast-forward 10 years or so, and there are almost 90 million, 90 million files online, and that’s impacting our children at all ages. And unfortunately, almost 70% of our youth, by the time they’re graduating high school, have been contacted, have had their trust broken by a predator. That is an extraordinary issue. We must all respond to it. I have one of the foremost leaders in the not-for-profit sector, Julie Cordua. And Julie, welcome to the show.

So, Julie, tell us about your role in doing amazing not-for-profit work and what inspires you to do this work, at RED and now at Thorn. And then we’re going to get really deeply into what Thorn does.

Julie Cordua:

Oh, great. Well, yeah, so good to see you again. When I saw you at the summit, it was like, “Carol, it’s been years.”

Carol Cone:

I know.

Julie Cordua:

So it’s really nice to reconnect, and thank you for taking the time to cover this issue. So I didn’t set out in my career to work in the nonprofit space. I actually started my career in wireless technology at Motorola and then a startup company called Helio. And I loved technology. I loved how fast it was changing, and I really thought it could do good in the world, connect people all around the world. And then all of a sudden one day I got this phone call and the person said, “Hey Julie, this is Bobby Shriver. I’m starting something and I want you to come join it.” And at the time he was concepting with Bono this idea of RED, which was: how do you take the marketing prowess of the private sector and put it to work for a social issue?

And I thought, “Ooh, if we could use marketing skills to change the way people in the world have access to antiretroviral treatment for HIV, that’s incredible. Those are incredible things I could do with my skills.” And so I joined RED and I learned a ton. And what carried over to Thorn, my move to Thorn, was learning that we could look at social issues or problems less as, “Is this a nonprofit issue or is this a private sector issue?” and more like, “Let’s take all of these skills, all the best talent from all parts of society, and put them towards an issue. What could be done?”

Carol Cone:

Thank you. And I love how you describe Thorn on the website, as an innovative technology nonprofit building products and programs that combat child sexual abuse at scale. So why don’t you unpack that a bit and explain to our listeners what Thorn is, and then we’re going to get into all the details of why it’s critically important for every single parent, teacher, and appropriate regulator to address this issue. This issue can’t be unknown. It needs to be absolutely prevalent.

Julie Cordua:

Yeah. So child sexual abuse in our society globally has dramatically changed over the last decade. Most child sexual abuse today has some form of a technology component. That can mean that the documentation of the abuse of a child is spread online. That has been going on for much longer than a decade. But over the last decade, we’ve seen the rise of grooming and of sextortion. Now there’s generative AI child sexual abuse material, and perpetrators asking children or enticing children for content with money or gifts online. As my head of research says, geography used to be a protective barrier. If you didn’t live near or with an abuser, you wouldn’t be abused. That has been destroyed. Now, every single child with an internet connection is a potential victim of abuse.

And a lot of the things that we have done in the past still hold true. We need to talk to our children about these issues, we need to talk to parents, we need to talk to caregivers, but there’s a new dimension we must address, which is creating a safer online environment and building solutions at scale, from a technology perspective, to create safer environments. And that’s what we do. So we merge social research with technical research and, this is where the idea of private sector thinking comes in, with software solutions at scale. And our whole goal is that we can help reduce harm and find children faster, but also create safer environments so kids can thrive with technology in their lives.

Carol Cone:

Thank you. And just for our listeners, let’s talk about some numbers here. On your website, which is a terrific website, it’s preeminent, it’s beautifully done, very informative, not overwhelming. It helps parents, it helps children, it helps your partners. So you talk about how 10 years ago there were something like 450,000 files online that might be related to child sexual abuse, and now you say it’s up to something like 87 million around the globe?

Julie Cordua:

That’s actually just in the United States.

Carol Cone:

Oh, just in the United States. Wow, I didn’t even know that.

Julie Cordua:

And the challenging thing with this crime is that we can only count what gets reported. So in the last year, there were over 90 million files of child sexual abuse material, images and videos, reported by tech companies to the National Center for Missing and Exploited Children, which is where these companies are required to report that content. So if you’ve got over 90 million files reported in one year, that’s just what’s found. There are a lot of platforms where this content circulates where no one looks, and so it’s not found. So you can imagine that the amount of content circulating is much, much higher. And also, that was just the United States. If we go to every other country in the world, there are tens of millions, hundreds of millions of files of abuse material circulating.

Carol Cone:

You know what I’d love you to do? The story you told at SIS was so powerful. People, you could hear a pin drop in the room. Could you just share that short story? Because I think it shows the trajectory of how a child might get pulled into something that seemed so simple at first.

Julie Cordua:

Yeah. In this story, obviously I’m not using a real child’s name, and the facts are pulled from multiple victim stories to preserve confidentiality. But what we’re seeing with sextortion and grooming, how this is presenting, I mean it presents in a lot of different ways, but one way that we’re seeing grow pretty exponentially right now is this: a child is on, let’s say, Instagram, and they have a public profile. And actually, the targets of this particular crime are mostly young boys right now. So let’s say you’re a fourteen-year-old boy on Instagram, with a public profile. A girl gets into your messages and says, “Oh, you’re cute. I like your soccer photo.” And then she moves the conversation to direct messaging, so now it’s private. And they might stay on Instagram or they might move to a different messaging platform like WhatsApp. And this girl, and I’m doing air quotes, starts kind of flirting with the young boy, and then at some point maybe shares a topless photo and says, “Do you like this? Share something of your own.”

And this person that the child has friended on social media, they think is their friend. And so they’ve actually friended them on Instagram, maybe friended them on any other platforms they’re a part of. And because they’re flirting, they might send a nude photo. And then what we’re seeing is that suddenly this girl is not a girl; this girl is a perpetrator. And that conversation changes from flirtation to predatory conversation, and it usually becomes something like, “Send me $100 on Venmo or Cash App right now, or I’ll send that nude photo you sent me to all your family, all of your friends, every administrator at your school, and your coaches, because I’m now friends with you on Instagram and I have all of their contacts.” And that child, if you can imagine, and I tell this story a lot, and I have kids, feels trapped, feels humiliated.

And we see that these kids often do have access to Cash App, or another thing we’re seeing is that they actually use gift cards to make these payments at times, and they’ll send $100 and they think it’s over, but the person keeps going, “Send me $10 more, send me $50 more.” And they’re trapped. And the child feels like their life is over. Imagine a 13- to 14-year-old kid sitting there going, “Oh my God, what have I done? My life is over.” And unfortunately, we have seen too many cases where this does end in the child taking their life.

Carol Cone:

Oh my God.

Julie Cordua:

Or self-harm or isolation, depression. And we’re seeing now, I think it’s up to about 800 cases per week of sextortion being reported to the National Center for Missing and Exploited Children. And this is a crime type, when I talk about how child sexual abuse has evolved, that is very different from where we were 15 or 10 years ago. It’s going to require different types of interventions, different types of conversations with our children, but also different types of technology interventions that these companies need to deploy to make sure that their platforms aren’t harboring this type of abuse.

Carol Cone:

So let’s take a deep breath, because that story is astounding, that a child would take their life or just be so depressed and not know how to get out of this. So first, let’s talk about the technological solution. You’re working with a lot of technology companies and you’re providing them with tools. So what do those tools look like?

Julie Cordua:

Yeah. So we have a product called Safer, which is designed for tech platforms, essentially trust and safety teams, to use. It’s a specialized content moderation system that detects image, video, and text-based child sexual abuse. So companies that want to make sure their platforms aren’t being used to abuse children, which I think is most companies, can deploy it, and it will flag images and videos of child sexual abuse that the world has already seen. It will also flag new images, and it can detect text-based harms too, so some of this grooming and sextortion, so that trust and safety teams can get a notification that says, “Hey, kind of red alert. Over here is something you may want to look at. There may be abuse happening.” And they can intervene and take it down or report it to law enforcement as needed.

Carol Cone:

And how have technology platform companies responded to Thorn?

Julie Cordua:

Great. I mean, we have about 50 platforms using it now, and that’s obviously a drop in the bucket compared to what needs to happen. But our whole position is that every platform with an upload button should be detecting child sexual abuse material. And unfortunately, in the media, we sometimes see companies get hammered for having child sexual abuse material on their platform or for reporting it. That’s the wrong approach. The fact is that every single company with an upload button that we have seen try to detect it finds child abuse, and that means perpetrators are using their platforms for abuse. So it’s not bad on the company that they have it; it becomes bad when they don’t look for it. If they put their head in the sand and act like, “Oh, we don’t have a problem,” that’s where I’m like, “Oh wait, but you do. So you actually need to take the steps to detect it.” And I think as a society, we should be praising the companies that take steps to actually implement systems to detect this abuse and make their platforms safer.

Carol Cone:

Would you like to give any shout-outs to some exemplary platform companies that have really partnered with you?

Julie Cordua:

Yeah. I mean we have, and this is where I’m going to have to look at our list to make sure I know who I can talk about. We’ve worked with a lot of companies. You have a company like Flickr, which hosts a lot of images, that has deployed it; Slack; Disco; Vimeo from a video perspective; Quora; Ancestry. Funny, people are like, “Ancestry?” But this goes back to the point I make: if you have an upload button, I can almost guarantee you that someone has tried to use your platform for abuse.

Carol Cone:

Okay, so let’s talk about the people part of this, the parent. You have so many wonderful products and programs online for parents. Just talk about what you offer, because you’ve got online, you’ve got offline, you’ve got messages to phones. Parents, as you say, need to have that trusting relationship with the child. And I love that you talk about how, once a child, even pre-puberty or so, gets a phone in their hand, they also have a camera, and it’s very, very different from when we grew up. So what’s the best advice you’re giving to parents, and how are parents responding?

Julie Cordua:

Yeah. So we have a resource called Thorn for Parents on our website, and it’s designed to give parents some conversation starters and tips, because in our experience working with parents, parents are overwhelmed by technology and overwhelmed by talking about anything related to sex or abuse, and now we’re operating at the intersection of all of those things. So it makes it really hard for parents to figure out, “What are the right words? When do I talk about something?” And our position is: talk early, talk often, reduce shame, and reduce fear as much as possible. Just take a deep breath and realize that these are the circumstances around us. How do I equip my child, and equip our parent-child relationship, with the trust and the openness to have conversations?

What you’re aiming for, obviously, the very first thing is no harm. You don’t want your kid to encounter this, but think about that out in the real world. If we tried to live in a no-harm state, you’d be protecting your kid with things like, “Don’t go on a jungle gym.” The reality is that children will be online whether you give them a phone or not; they might be online at their friend’s house or somewhere else. So if you can’t guarantee no harm, what you want is for a child who finds themselves in a difficult situation to know that they can ask for help. Because go back to that story I told about the child who was being groomed. The reason they didn’t ask for help was that they were scared. They were afraid of disappointing their parents, they were afraid of punishment. We hear from kids that they’re scared their devices are going to get taken away, and their devices are what connect them to their friends.

And so how do we create a relationship with our child where we’ve talked openly about what they might encounter, so they know the red flags? And we say to them, “Hey, if this happens, know that you can reach out. I’m going to help you no matter what. And you’re not going to be in trouble. We’re going to talk about this. I’m here for you.” I can’t guarantee that’s always going to work, kids are kids, but you’ve created an opening, so if something happens, the child might feel more comfortable talking to their parent. And so a lot of our resources are around, “How do we help parents start that conversation and approach their children with curiosity in this space, with safety, really reducing shame, removing the shame from the conversation?”

Carol Cone:

Can you just practice with me a little bit of that kind of conversation? I’ve heard about all this child sexual abuse that’s happening all over the internet. What do I do with my child? How do I have a conversation with them?

Julie Cordua:

That is a great question to ask, and I’m glad you’re asking it, because something I’d say is don’t get your kid a phone until you’re ready to talk about difficult subjects. So ask yourself that question. And if you’re ready to talk about nudity, nude pics, pornography, abuse, then maybe you’re ready to provide a phone. And I’d say before you give the phone, talk about expectations for how it’s used, and also talk about some of the things they might see on it. The phone opens up a whole new world. There may be information on there that doesn’t make you feel good, that isn’t comfortable. And if that ever happens, know that you can turn it off and you can talk to me about it.

I also think it’s really important to talk to kids, when they have a phone, about how they define a friend, and who someone online is. Is that person a real person? Do you know who they are? Have you met them in person? Also talk about what kind of information you share online. And then there’s a whole list, and we have some of this on our website, but I’d say, “These are conversations to have before you give the phone, when you give the phone, and every week after you give the phone.” But I’d also pair it with being curious about your kids’ online life. If all of our conversations with our kids are about the fear side, we don’t foster the idea that technology can be good. So also include, “What do you enjoy doing online? Show me how you build your Minecraft world. What games are you playing?”

And help them understand, because that safety, that comfort, that joy they get from talking to you will create a safer space for them to open up when something does go wrong, versus every conversation being scary and threat-based. Have conversations like, “I’m curious about your online life. What do you want to learn online? What are you exploring? Who are your friends?” Talk about that for 10 minutes and have one minute be about, “Have you encountered anything that made you uncomfortable today?” So have the right balance in those conversations.

Carol Cone:

Oh, that’s great advice. That’s really, really great advice. And again, where can parents go online to find Thorn? What’s the web address?

Julie Cordua:

Thorn.org, and we have a lot of resources on there from our Thorn for Parents work as well as our research.

Carol Cone:

So can you talk about what you’re doing on the regulatory side? Because it’s really important.

Julie Cordua:

Yeah, it’s really interesting to see what regulators around the world are doing. Different countries are taking different approaches. Some countries are starting to require companies to detect child sexual abuse, and others, and I think this is the way the US may go, though it’s going to take a while, are requiring transparency. And to us that is a true baseline: companies should be transparent about the steps they’re taking to keep children safe online. That gives parents and policymakers and all of us the ability to make informed decisions about what apps our kids use and what is happening.

Carol Cone:

What’s your hope for the US on regulation, considering the US is still struggling with regulating technology companies overall?

Julie Cordua:

I think it’ll be a while in the US. They’ve got a lot going on, but I think that first step of transparency will be key. If we can get all companies to be transparent about the child safety measures they’re putting in place, that would be a big, huge step forward.

Carol Cone:

Great, thank you. You’re so smart in terms of really building a listening ecosystem, and I noticed that you have a Youth Innovation Council. Why did you create it and how do you use it?

Julie Cordua:

I love our Youth Innovation Council. You listen to them talk and you kind of want to just say, “Okay, I’m going to retire. You take over.” I mean, we’re talking about creating a safer world online for our kids, and this is a world that we didn’t grow up with. For these kids, it’s just ingrained in their lives. And this is why I always want to be really careful. I work on harms to children, but I really, really believe that technology can be beneficial. It’s beneficial to our society, it can be beneficial to kids, it can help them learn new things and connect with new people. And that’s why I want to make it safer, so they can benefit from all of that.

And when you talk to these kids, they believe that too, and they want to have a voice in creating an internet that works for them, that doesn’t abuse them. So I think we would be remiss not to have their voices at the table when we’re crafting our strategies and when we’re talking to tech companies and policymakers. It’s amazing to see the world through their eyes, and I really, really believe that since they’re the ones living on the internet, living with technology as a core part of their lives, they should be part of crafting how it works for them.

Carol Cone:

So share with our listeners: what’s NoFiltr? Because that’s one of your products that’s helping the younger generation online actually combat sexual images.

Julie Cordua:

Right. So NoFiltr is our brand that speaks directly to youth. We work with a lot of platforms that run prevention campaigns, and those campaigns often point to our NoFiltr website. We have social media on TikTok and other places speaking directly to youth, and our Youth Council helps curate a lot of that content. But it’s really about, instead of Thorn speaking to youth, because we aren’t youth voices, kids speaking to youth about how to be safe online, how to build good communities online, how to be respectful, and how to treat each other if something happens that isn’t what they want. At least, that’s our goal.

Carol Cone:

So it’s not that you’re wagging a finger or scaring anyone to death. You’re empowering each one of those audiences. And that’s a brilliant part of how Thorn has been put together. I want to ask about the next really scary challenge for all of us, and that’s AI, generative AI, and how it’s driving more imagery and more child sexual abuse around the globe, and how you’re preparing to address it.

Julie Cordua:

Yeah. So we saw that one of the first applications of generative AI was to create child sexual abuse material. To be fair, AI-generated abuse material had been created for a few years before that. But with the introduction, about two years ago, of these more democratized models, we saw more abuse material being created. And actually, we have an incredible research team that does original research with youth, and we just released our youth monitoring survey and found that one in 10 kids knows someone, has a friend, or has themselves used generative AI to create nudes of their peers. So we’re seeing these models used by everyone from peers, as in youth who think it’s a prank, though we know it obviously has broader consequences, all the way to perpetrators using it to create abuse material of children they see in public.

And so actually, one of the first things we did a year ago was convene about a dozen of the top gen AI companies to actively design principles by which their companies and models would be built to reduce the likelihood that their gen AI models would be used to develop child sexual abuse material. Those were released this past spring, and a lot of those companies have started to report on how they’re doing against the principles they agreed to. Things like, “Clean your training set; make sure there’s no child sexual abuse material in the data before you train your models. If you have a generative image or video model, use detection tools at upload and output to make sure that people can’t upload abuse material and that you’re not generating abuse material.”

Things get harder with open-source models, not OpenAI, but open-source models, because you can’t always control those components. But there are other things you can do with open-source models, like cleaning your training dataset. Hosting platforms can make sure they’re not hosting models that are known to produce abuse material. So every part of the gen AI ecosystem has a role to play. The principles are defined, and they were co-developed by those companies, so we know they’re feasible and they can be implemented right now, right out of the gate.

Carol Cone:

Great work, and it’s terrific that you’ve gotten those principles done. Really, you’re in service of empowering the public and all users, whether adults or children. I mean, you’ve been doing this work brilliantly for over a decade. What’s next for you to tackle?

Julie Cordua:

I mean, this issue will require perseverance and persistence. I feel like we have created solutions that we know work. Now we need broader adoption. We need broader awareness that this is an issue. The fact is that globally, we know that the majority of children, by the time they reach 18, will have a harmful online sexual interaction. In the US, that number is over 70%, I think. And yet, as a society, we aren’t really talking about it at the level we need to. So we need to incorporate this as a topic. You opened the segment with this: this is a public health crisis. We need to start treating it like one. We need to be having policy conversations, and we need to be thinking about it at the pediatrician level. When you go in for a checkup, we’ve done a really good job of incorporating mental health checks at the pediatric checkup level.

I’ve been thinking a lot about how you incorporate this type of intervention. I don’t know exactly what that looks like. I’m sure there’s someone smarter than me out there who can think about that. But we have to integrate this into all aspects of a child’s life because, as I said, technology is integrated into all aspects of a child’s life. So we need to think about this not just as something a parent or a tech company has to think about, but doctors, policymakers, and educators too. It’s a pretty new issue. When I was working in global health, we had been trying to tackle global health issues for decades. This issue is only about a decade old, if you will. I’d say we’re still in our infancy, but it’s growing fast and we have no time to wait. We have to act with urgency to raise awareness and integrate solutions across the ecosystem.

Carol Cone:

Great. I’m just curious: this is a tough issue, this is a dark issue. You’ve got kids right in the zone. How do you stay motivated and optimistic to keep doing great work?

Julie Cordua:

Oh, thank you. You see the results every day. So if I’m ever getting discouraged, I try to make sure I go talk to an investigator who uses our software to find a child. I talk to a parent who has used our solutions to create a safer environment for their kids, or a parent who might even be struggling, and they give me the inspiration to keep working because I don’t want them to struggle. Or I talk to the tech platforms. I actually think... So we started working 13 years ago. I’d say there were no trust and safety teams then. We were working with engineers who were being asked to find thousands of pieces of abuse, and they had no technology to do it. One thing that gives me hope is that we’re sitting here at the dawn of a new technical revolution with AI and gen AI, and we actually have engaged companies. We have trust and safety teams, and we have technologists who can help create a safer environment.

So I’ve been in it long enough that I get to see the progress. I get to meet the people who are doing the even harder work of recovering these children and reviewing these images. And if I can make their lives better and give them tools to protect their mental health so they can do this hard work, I feel like I’m of service. So that progress helps. And I’ll say, for our team, one thing that’s really inspiring, and we say this a lot: we have a team of almost 90 people at Thorn who work on this. And you don’t go to college and say, “I want to work on one of the darkest crimes in the world.” All of these people have given their time and talent to this mission, and that’s incredibly inspiring. But I’d say it’s the progress that keeps me going. And we do offer a lot of mental health services and other wellness services for our employees.

Carol Cone:

That’s so great. This has been an amazing conversation. I’m now much better versed on this issue, and I thought I knew all the social issues since I’ve been doing this work for decades. So thank you for all the great work you’re doing. I always love to give the last word to my guest, so what haven’t we discussed that our listeners need to know about?

Julie Cordua:

There are just a few things. Sometimes this issue can feel overwhelming and people are kind of like, “Ah, what do I do?” If you’re at a company that has an upload button, reach out, because we can help you figure out how to detect child sexual abuse. Or if you’re a gen AI company, we can help you red team your models to make sure they aren’t creating child sexual abuse material. If you’re a parent, take a deep breath, look at some resources, and start to think about how to have a curious, calm, engaging conversation with your child, with the initial goal of simply opening a line of dialogue so that there’s a safety net there, and then keep doing that on a regular basis.

And if you’re a funder and you think, “This work is interesting,” our work is philanthropically funded. It’s a difficult issue to talk about, we’ve talked about that, and I really do think those who join this fight are brave to take it on, because it’s a difficult issue and we’ve got an uphill battle, but it’s our donors and our partners who make it possible.

Carol Cone:

You are really building a community, a very, very powerful community, with companies and products and tools, and you are to be commended. And I’m so glad we ran into each other at the Social Innovation Summit. The new playground is technology and screens, and that’s where children are hanging out, and we need to protect our children. I know there’s a lot more work to do, but I feel a little bit calmer knowing that you’re at the helm of building this amazing ecosystem to address child sexual abuse online. So thank you, Julie. It’s been a great conversation.

Julie Cordua:

Thank you so much. Thanks for having the conversation and for being willing to shine a light on this. It was wonderful to reconnect.

Carol Cone:

This podcast was brought to you by some amazing people, and I’d love to thank them: Anne Hundertmark and Kristin Kenney at Carol Cone ON PURPOSE; Pete Wright and Andy Nelson, our crack production team at TruStory FM; and you, our listener. Please rate and rank us, because we really want to be as high as possible among the top business podcasts available, so that we can continue exploring together the importance and the activation of authentic purpose. Thank you so much for listening.

This transcript was exported on Sep 05, 2024.

p360_Thorn RAW (Completed 09/05/24)

Transcript by Rev.com
